Assessing Reliability of Medical Record Reviews for the Detection of Hospital Adverse Events

Abstract

Objectives:

The purpose of this study was to assess the inter-rater reliability and intra-rater reliability of medical record review for the detection of hospital adverse events.

Methods:

We conducted a two-stage retrospective medical record review of a random sample of 96 patients from one acute-care general hospital. The first stage was an explicit patient record review by two nurses to detect the presence of 41 screening criteria (SC). The second stage was an implicit structured review by two physicians to identify the occurrence of adverse events among the cases that were positive for the SC. The inter-rater reliability of the two nurses and that of the two physicians were assessed. The intra-rater reliability was also evaluated using the test-retest method, with the retest conducted approximately two weeks later.

Results:

In 84.2% of the patient medical records, the nurses agreed as to the necessity for the second stage review (kappa, 0.68; 95% confidence interval [CI], 0.54 to 0.83). In 93.0% of the patient medical records screened by nurses, the physicians agreed about the absence or presence of adverse events (kappa, 0.71; 95% CI, 0.44 to 0.97). When assessing intra-rater reliability, the kappa indices of two nurses were 0.54 (95% CI, 0.31 to 0.77) and 0.67 (95% CI, 0.47 to 0.87), whereas those of two physicians were 0.87 (95% CI, 0.62 to 1.00) and 0.37 (95% CI, -0.16 to 0.89).

Conclusions:

In this study, the medical record review for detecting adverse events showed intermediate to good levels of inter-rater and intra-rater reliability. A well-organized training program for reviewers and clearly defined SC are required to obtain more reliable results in hospital adverse event studies.

INTRODUCTION

Measuring the size of the patient safety problem is the first step toward enhancing patient safety [1]. Various methods and indicators are used to measure patient safety problems [2,3]. The incidence of adverse events (AEs) is a representative indicator widely used for this measurement. The Harvard Medical Practice Study (HMPS), which provided a basis for raising patient safety as an important policy agenda in the US, defined an AE as “an injury that was caused by medical management and that prolonged the hospitalization, produced a disability at the time of discharge, or both” [4]. As AEs are related to direct treatment outcomes, such as patient outcomes, length of stay, and medical expenditure, they are useful for measuring patient safety levels [5]. Furthermore, AEs include medical errors such as medication errors, and thus represent patient safety levels comprehensively. Additionally, as AEs have been widely and continuously used as indicators of patient safety, the concept is well established.

The HMPS examined patient medical records in New York State hospitals and evaluated AE occurrence, medical mistake or error occurrence, and patient disability level caused by AEs [4,6]. Many studies have since determined the incidence of AEs based on the HMPS methodology [7]. Their biggest commonality is that evidence for AE occurrence was found by examining medical records. Specifically, previous studies applied a 2-stage examination method in which 2 nurses in the first stage and 2 physicians in the second stage examined medical records individually, taking the form of a sequential and independent review. Medical record review is commonly used to identify AE occurrence as medical records are easily accessible. However, it requires much time and necessitates commitment from medical professionals, resulting in higher cost than other methods [8]. Furthermore, incomplete or inaccurate documentation can lead to underestimation of the occurrence of AEs. Above all, the biggest shortcoming of medical record review for determining AEs is that the results may not be reliable if consistency among different reviewers, or even within a single reviewer, is lacking [9]. Therefore, medical record review reliability should be examined and enhanced before conducting a study to measure AE incidence in hospitals [10].

In this study, we evaluated both intra-rater and inter-rater reliability for screening criteria (SC) detection and AE identification through medical record review.

METHODS

Study Design

A retrospective medical record review was conducted in a general hospital with approximately 500 beds. Review for AE identification was based on the HMPS methodology including a 2-stage examination (Figure 1) [4,6]. First, specific dates in 2007 were randomly selected using a random number table, and the admissions of all patients discharged on those dates were selected as index admissions. Psychiatric department admissions were excluded. Medical records from the entire duration of hospitalization and 1 year before and after were reviewed using a case review form developed in a previous study [11]. The first-stage review conducted by nurses screened medical records for 41 SC. These criteria encompassed events with a high possibility of AE occurrence, such as antidote use, or those highly likely to lead to the occurrence of additional AEs. The case review form included 41 SC chosen through the modified Delphi method from a combination of previously used SC [11]. These criteria can be divided into 19 items from the HMPS-format studies and 28 items from the Global Trigger Tool (GTT), with 6 items belonging to both groups (Supplemental Table 1). As opposed to the HMPS-type SC, which rely more heavily on the reviewer’s clinical judgment, the GTT-type SC are more heavily based on objective clinical examination results [11]. For the convenience of the physicians during their review, nurse reviewers marked the corresponding medical record with a Post-it note when they found entries meeting the SC.

Two nurse reviewers independently reviewed medical records of the same patients. All cases determined by at least one nurse to meet the SC were included in the second-stage review for AE identification. Furthermore, to determine the occurrence of false negative results in the first-stage review, approximately 10% of cases that did not meet the SC during the first-stage review were randomly selected and included in the second-stage review. Two physician reviewers also independently reviewed medical records of the same patients to identify AE occurrence. Cases identified by both physician reviewers as having AEs were classified as AEs. In cases where the opinions of the physician reviewers differed, a panel of professionals made the final decision.
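
The selection rule for the second-stage review can be sketched in a few lines of Python. This is only an illustration, not the authors' procedure: the function name `select_second_stage`, the use of `math.ceil` to turn "approximately 10%" into a count, the fixed random seed, and the patient flags are all assumptions made for the example.

```python
import math
import random


def select_second_stage(nurse1_flags, nurse2_flags, negative_fraction=0.10, seed=0):
    """Return (flagged, sampled_negatives) lists of patient IDs for physician review.

    nurse1_flags / nurse2_flags map a patient ID to True when that nurse
    found at least one screening criterion (SC) in the record.
    """
    patients = sorted(nurse1_flags)
    # A record goes forward when at least one nurse flagged it.
    flagged = [p for p in patients if nurse1_flags[p] or nurse2_flags[p]]
    negatives = [p for p in patients if not (nurse1_flags[p] or nurse2_flags[p])]
    # Assumption: "approximately 10%" is rounded up to a whole number of records.
    n_sample = math.ceil(len(negatives) * negative_fraction)
    sampled = random.Random(seed).sample(negatives, n_sample)
    return flagged, sorted(sampled)


# Hypothetical flags for 96 patients (not the study data).
patients = range(1, 97)
nurse1 = {p: p <= 40 for p in patients}
nurse2 = {p: 31 <= p <= 52 for p in patients}

flagged, sampled = select_second_stage(nurse1, nurse2)
print(len(flagged), len(sampled), len(flagged) + len(sampled))
```

With these hypothetical flags, 52 records are flagged by at least one nurse and 44 by neither, so rounding 10% of 44 up adds 5 screen-negative records to the physicians' workload.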

For evaluation of intra-rater reliability, approximately half of the patients from the primary investigation were selected for a secondary investigation, which was conducted using the same method. However, in the secondary investigation, the second-stage review was not conducted on cases that did not meet the SC during the first-stage review.

Reviewers and Reviewer Training

A total of four reviewers participated in this study: two physicians (P1 and P2) and two nurses (N1 and N2). First-stage reviewers were nurses with five or more years of clinical experience, and second-stage reviewers were specialists with ten or more years of clinical experience. Reviewers were trained for approximately two hours. Educational content consisted of understanding the concept of AEs, instructions on using the case review form, and a practice exercise using an actual medical record.

Statistical Analysis

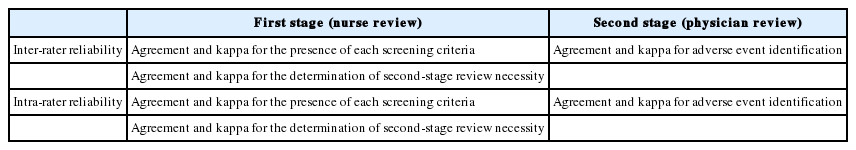

Inter-rater and intra-rater reliability of the reviews by nurses and physicians was examined (Table 1). First, inter-rater reliability of the nurses’ reviews was analyzed for two different purposes: to assess the presence of SC and the necessity of the second-stage review. In evaluating the SC, agreements and kappa values were calculated for each of the 41 items of the SC. This represents the reliability of the two nurses’ decisions on whether the same patient meets a specific screening criterion. As patients determined to meet at least one screening criterion are subjected to the second-stage review, inter-rater reliability of nurses was also shown using the agreement and kappa value for second-stage review necessity (last rows of Tables 2 and 3). It was then examined whether the reliability of the nurses’ reviews varied depending on SC characteristics. Using the aforementioned analytical method, differences in inter-rater reliability of nurses regarding second-stage review necessity based on SC characteristics were examined. In other words, cases corresponding to more than one screening criterion used in the HMPS-type studies and cases corresponding to more than one GTT-type screening criterion were examined separately. Six overlapping criteria were included in both groups.
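
For readers unfamiliar with the statistic, the following minimal sketch shows how percent agreement and Cohen's kappa are obtained from two raters' yes/no decisions on the same patients. The study itself used SPSS; the function name `agreement_and_kappa` and the ten ratings are hypothetical and serve only to illustrate the calculation.

```python
def agreement_and_kappa(ratings_a, ratings_b):
    """Percent agreement and Cohen's kappa for two raters' yes/no decisions.

    Returns (agreement, kappa). kappa is None when chance agreement equals 1,
    for example when neither rater ever records the criterion.
    """
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    pairs = list(zip(ratings_a, ratings_b))
    both_yes = sum(1 for a, b in pairs if a and b)
    both_no = sum(1 for a, b in pairs if not a and not b)
    # Observed agreement: cases where both decisions coincide, over all patients.
    p_observed = (both_yes + both_no) / n
    # Chance agreement from each rater's marginal "yes" proportion.
    p_yes_a = sum(1 for a in ratings_a if a) / n
    p_yes_b = sum(1 for b in ratings_b if b) / n
    p_expected = p_yes_a * p_yes_b + (1 - p_yes_a) * (1 - p_yes_b)
    if p_expected == 1.0:
        return p_observed, None  # kappa is undefined
    return p_observed, (p_observed - p_expected) / (1 - p_expected)


# Hypothetical decisions of two nurses on ten patients (1 = criterion present).
nurse_1 = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
nurse_2 = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
agreement, kappa = agreement_and_kappa(nurse_1, nurse_2)
print(f"agreement={agreement:.2f}, kappa={kappa:.2f}")
```

In this hypothetical example the two raters agree on 8 of 10 patients (agreement 0.80), but because about half of that agreement is expected by chance, kappa is only about 0.58.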

Next, to investigate the inter-rater reliability of the physicians, we selected all cases in which at least one nurse found at least one screening criterion, together with five cases randomly selected from those in which neither nurse found any screening criterion. The physicians’ inter-rater reliability was calculated on the basis of whether an AE occurred. When a patient was judged to have 2 AEs, we did not examine whether the contents of those AEs were consistent between the reviewers. Inter-rater reliability of physicians was calculated using the agreement and kappa value for AE identification.

Using the results from the primary and secondary investigations, the intra-rater reliability of each nurse and physician reviewer was determined. The interval between the two reviews was 13 to 14 days for N1 and N2, 11 to 12 days for P1, and 14 days for P2. Using the same method as for inter-rater reliability, agreements and kappa values were determined. In all cases, agreement was calculated with the number of cases in which the two decisions being compared corresponded as the numerator and the total number of patients as the denominator.
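
The text does not state how the 95% confidence intervals for kappa were computed. As one plausible illustration, the sketch below uses a simple large-sample approximation of the standard error, sqrt(p_o(1 - p_o) / (n(1 - p_e)^2)), applied to a 2x2 table of counts. The function name `kappa_with_ci`, the choice of this approximation, the capping of the upper bound at 1, and the counts are all assumptions, so the output need not match SPSS exactly.

```python
import math


def kappa_with_ci(both_yes, a_only, b_only, both_no, z=1.96):
    """Cohen's kappa with an approximate large-sample confidence interval.

    Arguments are the four cells of the 2x2 table for two raters
    (or for the same rater at test and retest).
    """
    n = both_yes + a_only + b_only + both_no
    p_observed = (both_yes + both_no) / n
    p_yes_a = (both_yes + a_only) / n
    p_yes_b = (both_yes + b_only) / n
    p_expected = p_yes_a * p_yes_b + (1 - p_yes_a) * (1 - p_yes_b)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    kappa = (p_observed - p_expected) / (1 - p_expected)
    # Simple approximation of the standard error (an assumption, see lead-in).
    se = math.sqrt(p_observed * (1 - p_observed) / (n * (1 - p_expected) ** 2))
    lower = kappa - z * se
    # Assumption: the upper bound is capped at 1, the maximum possible kappa.
    upper = min(1.0, kappa + z * se)
    return kappa, lower, upper


# Hypothetical 2x2 table for 50 patients (not the study data).
kappa, lower, upper = kappa_with_ci(both_yes=20, a_only=5, b_only=10, both_no=15)
print(f"kappa={kappa:.2f} (95% CI, {lower:.2f} to {upper:.2f})")
```

With small samples or rare events the interval becomes very wide and its lower bound can fall below zero, which is why the lower bound is left uncapped here.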

SPSS version 21.0 (IBM Corp., Armonk, NY, USA) was used for all statistical analyses. The study was approved by the institutional review board of the National Evidence-based Healthcare Collaborating Agency (NECAIRB12-016-1).

RESULTS

Medical record review results are shown in Figure 1. The review was conducted on ninety-six patients discharged on three dates in 2007. Fifty-two patients were determined to have events satisfying at least one screening criterion by at least one nurse, and forty-four were determined by both nurses to meet none. Including the additional five individuals randomly selected from cases determined to have no screening criterion by reviews of the two nurses, physicians reviewed a total of fifty-seven patients. Eight patients were determined to have experienced AEs by both physician reviewers. Of the total 96 patients, 54 were re-investigated by nurses and 30 by physicians.

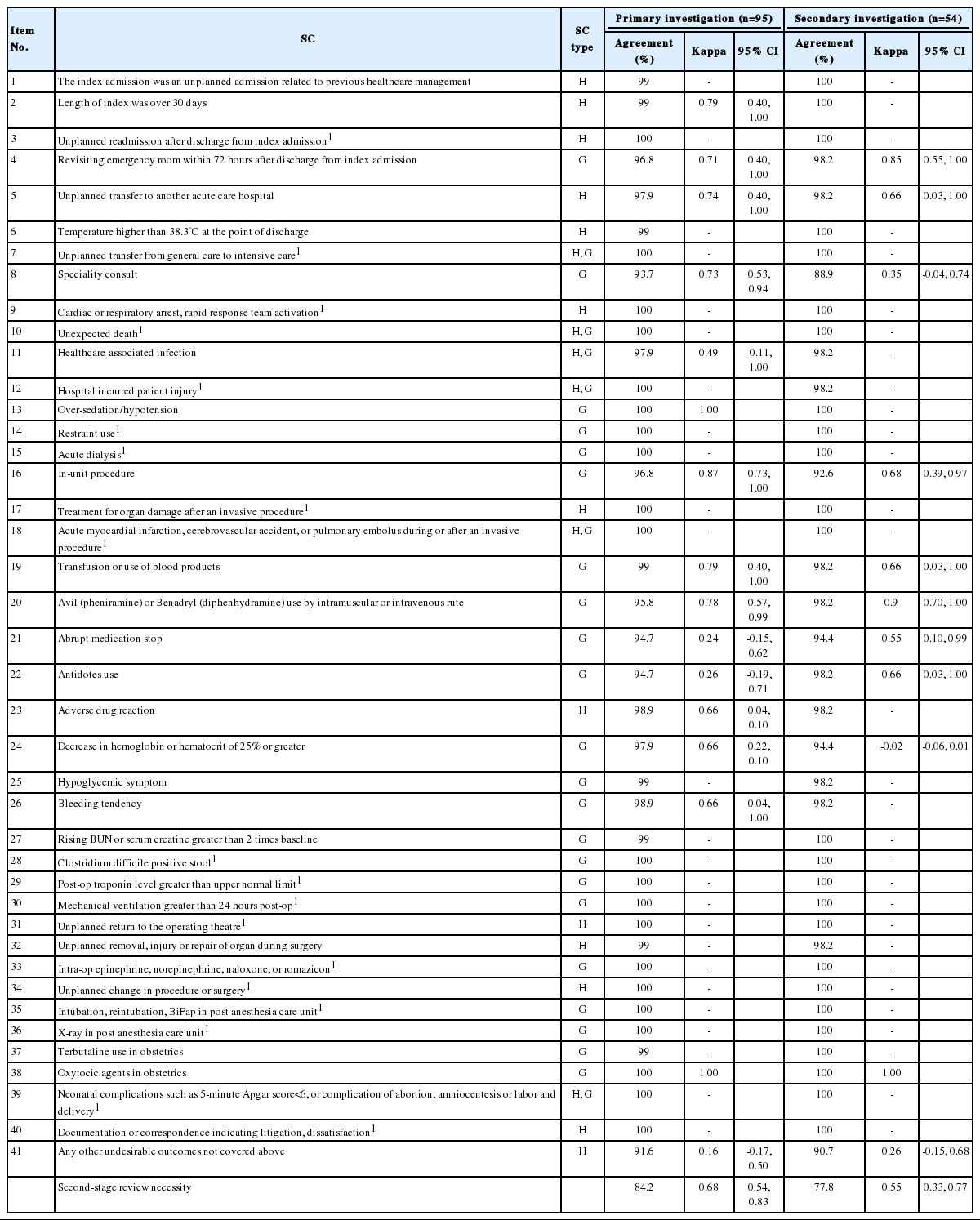

First, in looking at inter-rater reliability of nurses, agreements for the presence of each screening criterion ranged from 91.6% to 100% and the kappa values from 0.16 to 1.00 (Table 2). Kappa values could not be calculated for some SC because the reviewers did not observe them. Nineteen of the SC were not found in any of the ninety-six patients during the first-stage review. SC yielding kappa values of 0.4 or lower in the primary investigation were No. 41 (kappa, 0.16), No. 21 (kappa, 0.24), and No. 22 (kappa, 0.26). The kappa value for determination of second-stage review necessity in the primary investigation was 0.68 (95% confidence interval [CI], 0.54 to 0.83). In the secondary investigation, SC with kappa values of 0.4 or lower were No. 24 (kappa, -0.02), No. 41 (kappa, 0.26), and No. 8 (kappa, 0.35). In the secondary investigation, the kappa value for determination of second-stage review necessity was 0.55 (95% CI, 0.33 to 0.77).

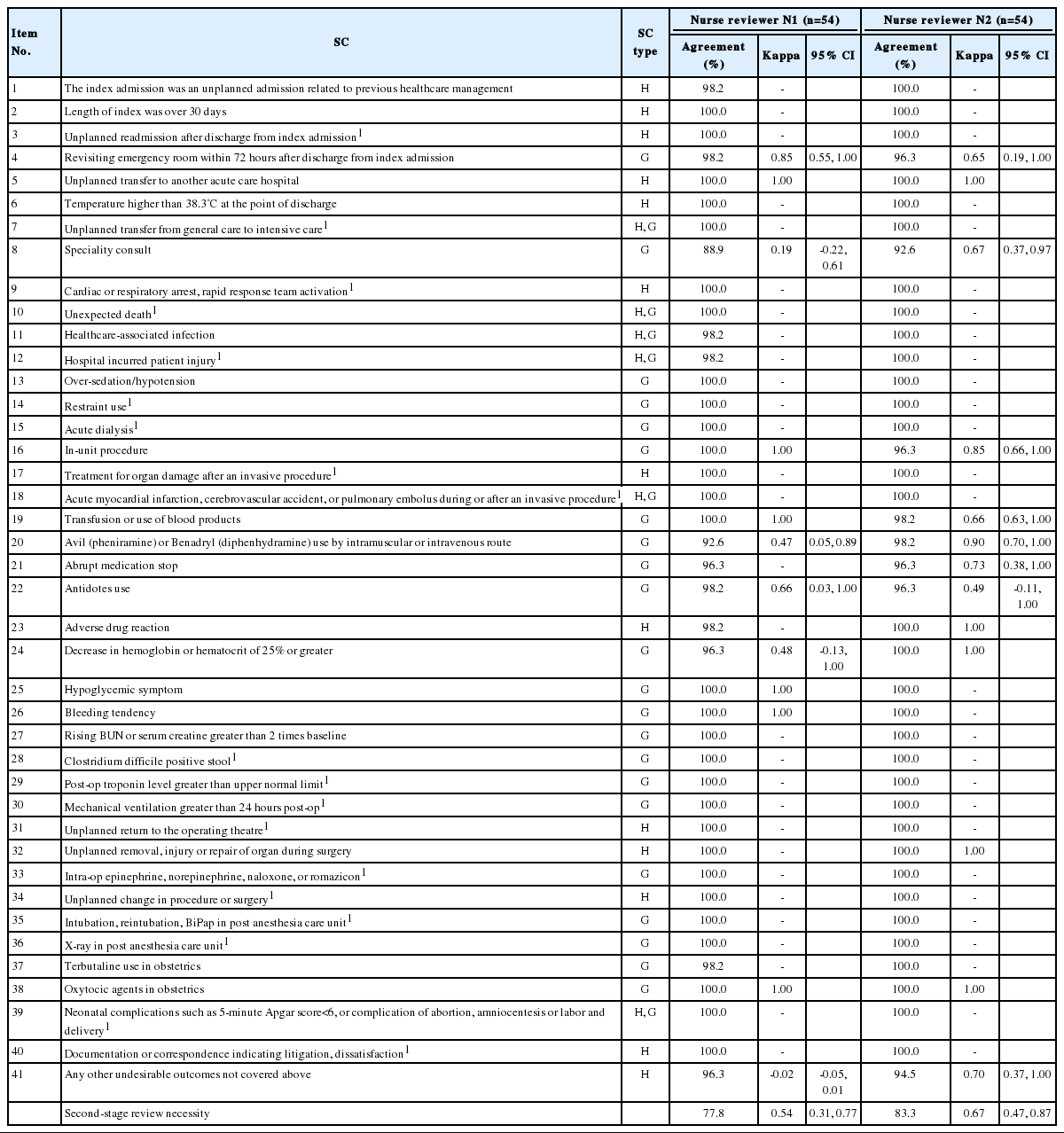

For intra-rater reliability, agreements for the presence of each screening criterion ranged from 88.9% to 100% for N1, and the kappa values ranged from -0.02 to 1.00 (Table 3). SC with kappa values lower than 0.4 were No. 41 (kappa, -0.02) and No. 8 (kappa, 0.19). The kappa value for second-stage review necessity was 0.54 (95% CI, 0.31 to 0.77). In the case of N2, agreements for the presence of each screening criterion ranged from 90.7% to 100%, and the kappa values ranged from 0.49 to 1.00. No SC had kappa values of 0.4 or less. The kappa value for second-stage review necessity was 0.67 (95% CI, 0.47 to 0.87).

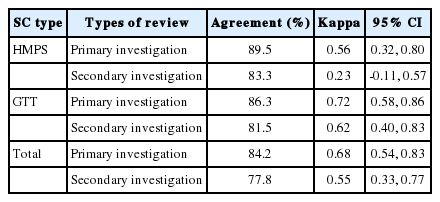

Looking at differences in reliability of reviews of nurses based on SC characteristics, the kappa values for reviews of nurses of SC used in the HMPS-type studies were 0.56 (95% CI, 0.32 to 0.80) for the primary investigation and 0.23 (95% CI, -0.11 to 0.57) for the secondary investigation (Table 4). On the other hand, the kappa values for reviews of nurses of the GTT-type SC were 0.72 (95% CI, 0.58 to 0.86) for the primary investigation and 0.62 (95% CI, 0.40 to 0.83) for the secondary investigation (Table 4).
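
The grouping behind this comparison can be illustrated with simple set operations. The sketch below is hypothetical throughout: the labels `H1`, `S1`, `G1` and so on are placeholders rather than the actual SC numbers, and `min_hits=1` assumes that a record counts toward a group when it meets at least one SC in that group. Only the group sizes (19 HMPS-type, 28 GTT-type, 6 shared, 41 in total) are taken from the text.

```python
# Placeholder labels; the real assignment of SC numbers to groups is in
# Supplemental Table 1 and is not reproduced here.
HMPS_ONLY = {f"H{i}" for i in range(1, 14)}   # 13 criteria used only in HMPS-type studies
SHARED = {f"S{i}" for i in range(1, 7)}       # 6 criteria belonging to both groups
GTT_ONLY = {f"G{i}" for i in range(1, 23)}    # 22 criteria used only in the GTT

HMPS_SC = HMPS_ONLY | SHARED   # 19 HMPS-type criteria
GTT_SC = GTT_ONLY | SHARED     # 28 GTT-type criteria


def needs_second_stage(found_sc, group, min_hits=1):
    """True when a nurse found at least `min_hits` criteria from `group`."""
    return len(set(found_sc) & group) >= min_hits


print(len(HMPS_SC), len(GTT_SC), len(HMPS_SC | GTT_SC))

# A record with one shared criterion counts in both analyses,
# whereas a record with only a GTT-specific criterion counts in one.
print(needs_second_stage({"S2"}, HMPS_SC), needs_second_stage({"S2"}, GTT_SC))
print(needs_second_stage({"G5"}, HMPS_SC), needs_second_stage({"G5"}, GTT_SC))
```

Once each record has a yes/no decision per group and per nurse, the group-specific kappa values are computed from those decisions in the same way as for the overall second-stage review necessity.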

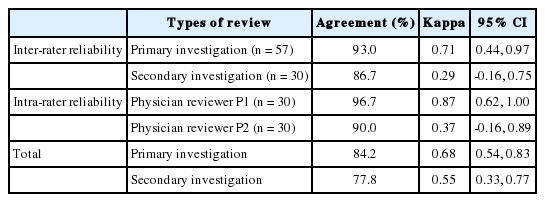

The kappa values for inter-rater reliability of physicians for detecting AE occurrence were 0.71 (95% CI, 0.44 to 0.97) for the primary investigation and 0.29 (95% CI, -0.16 to 0.75) for the secondary investigation (Table 5). In addition, intra-rater reliability of P1 for detecting AE occurrence for the secondary investigation of 30 individuals was represented by the kappa value of 0.87 (95% CI, 0.62 to 1.00) and that of P2 by the kappa value of 0.37 (95% CI, -0.16 to 0.89) (Table 5).

DISCUSSION

In this study, medical record reviews were performed to assess the inter-rater and intra-rater reliability of reviewers in detecting SC and AE occurrence. In the case of inter-rater reliability of nurses, agreements for the decision of second-stage review necessity were 84.2% in the primary investigation and 77.8% in the secondary investigation, and the kappa values were 0.68 (95% CI, 0.54 to 0.83) for the primary investigation and 0.55 (95% CI, 0.33 to 0.77) for the secondary investigation. Previous studies looking at inter-rater reliability of nurses using the same method showed an agreement of 84% and kappa value of 0.67 [12], an agreement of 82% and kappa value of 0.62 (95% CI, 0.54 to 0.69) [13], and a kappa value of 0.73 [14], all similar to the inter-rater reliability of nurses determined in this study.

For inter-rater reliability of physicians, agreements on AE occurrence identification were 93.0% for the primary investigation and 86.7% for the secondary investigation, and the kappa values were 0.71 (95% CI, 0.44 to 0.97) for the primary investigation and 0.29 (95% CI, -0.16 to 0.75) for the secondary investigation. Previous studies analyzing inter-rater reliability of physicians using the same methods showed an agreement of 89% and a kappa value of 0.61 [4]; an agreement of 79% and a kappa value of 0.4 (95% CI, 0.3 to 0.5) [15]; an agreement of 76% and a kappa value of 0.25 (95% CI, 0.05 to 0.45) [13]; and a kappa value of 0.74 [14]. Compared with these earlier results, the inter-rater reliability of physicians in this study was relatively higher in the primary investigation and lower in the secondary investigation. Furthermore, kappa values for the secondary investigation for both nurse and physician raters were lower than those for the primary investigation. As the CIs overlap, one must be cautious in interpreting such findings as significant, and repeated medical record reviews will be needed in future studies.
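
The overlap mentioned above can be checked directly from the reported intervals. The sketch below uses the CIs given in this paper; the helper name `intervals_overlap` is hypothetical. Overlap is only an informal check and not a formal test of the difference between two kappa values.

```python
def intervals_overlap(ci_a, ci_b):
    """True when two (lower, upper) intervals share at least one point."""
    (lower_a, upper_a), (lower_b, upper_b) = ci_a, ci_b
    return lower_a <= upper_b and lower_b <= upper_a


# 95% CIs for inter-rater kappa as reported in this study.
nurses_primary, nurses_secondary = (0.54, 0.83), (0.33, 0.77)
physicians_primary, physicians_secondary = (0.44, 0.97), (-0.16, 0.75)

print(intervals_overlap(nurses_primary, nurses_secondary))
print(intervals_overlap(physicians_primary, physicians_secondary))
```

Both comparisons return `True`, which is consistent with the caution expressed in the text.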

For intra-rater reliability, the kappa values of all reviewers except P2 were 0.4 or higher, showing intermediate to good agreement for N1 and N2, and excellent agreement for P1 [16]. This may be attributed to differences in reviewers’ medical record review experience. The 3 reviewers who showed intermediate to excellent agreement had more experience than the reviewer with a lower agreement level. As such, intra-rater reliability may increase as experience and training on medical record review for AE occurrence identification increase. In general, reliability of a measurement is affected by instrument variability, subject variability, and observer variability [17]. Reliability results in this study were analyzed considering such factors.
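
The interpretation applied here can be written as a small lookup. The cut-offs in this sketch (0.40 and 0.75) are an assumption: they follow a commonly used convention that is consistent with the labels in the paragraph above, but the exact thresholds of reference [16] are not stated in the text. The label for values below 0.40 is likewise a placeholder.

```python
def interpret_kappa(kappa, good_cutoff=0.40, excellent_cutoff=0.75):
    """Map a kappa value to an agreement label (cut-offs are assumptions)."""
    if kappa > excellent_cutoff:
        return "excellent"
    if kappa >= good_cutoff:
        return "intermediate to good"
    return "below intermediate"


# Intra-rater kappa values reported in this study.
intra_rater_kappa = {"N1": 0.54, "N2": 0.67, "P1": 0.87, "P2": 0.37}
labels = {reviewer: interpret_kappa(k) for reviewer, k in intra_rater_kappa.items()}
for reviewer, label in labels.items():
    print(reviewer, label)
```

Such labels describe the point estimates only; the wide interval reported for P2 (95% CI, -0.16 to 0.89) shows how uncertain the classification is with 30 re-reviewed patients.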

First, evaluation item characteristics were considered, as instrument variability could have affected reliability. Intra-rater reliability of nurses was measured by determining the absence or presence of SC, which is less likely to involve the subjective judgment of reviewers. Intra-rater reliability of physicians was determined by the absence or presence of AEs, which involves clinical decisions more so than determining the absence or presence of SC. Therefore, differences in the identification of AEs may have arisen from the clinical experience and knowledge of the physician reviewers [18]. Considering such differences, specific examples of various AEs should be provided in physician reviewer training to ensure more consistent clinical decisions.

Second, as subject variability, a lack of independence in the medical record review may have affected reliability. When cases that met the SC were found, the nurse reviewers marked the corresponding medical records with a Post-it note for the convenience of the physician reviewers. This could have given away clues while the nurses successively reviewed the medical records to find cases meeting the SC. Creating 2 copies of the medical records or utilizing electronic medical records with an anonymous system could solve this problem.

Third, as observer variability, the experience and training of reviewers could have affected the reliability. As with intra-rater reliability, differences in experience in reviewing medical records may have reduced inter-rater reliability. As the concepts of AEs and SC are still new to health care professionals, relying only on reviewers with sufficient experience and training in patient safety-related medical record review is likely to increase inter-rater reliability.

Overall, it can be indirectly inferred that studies determining AE incidence through medical record review can be conducted in South Korea (hereafter Korea). In addition, AE incidence in hospitals was found to be 8.3% (8/96), similar to the level in other studies [7,14]. This finding suggests that hospitals in Korea must pay more attention to patient safety and conduct additional studies to determine the size of patient safety problems within hospitals using sample patients or hospitals that can represent the whole nation.

Moreover, considering criticism on medical record review reliability [9], future studies must apply measures to increase reliability in determining AE occurrence. Such measures include standardizing measurement methods, training reviewers and raising their qualification levels, refining measurement tools, automating measurement tools, and repeating the measurements [17]. Considering these, measures to increase reliability in this study were determined as follows.

First, more thorough reviewer training will be needed to ensure adequate training and qualification levels of reviewers. Reviewer training in this study was likely insufficient compared to another study, which conducted an education program for 3 days [19]. Reviewers with less experience and training in medical record review for detecting AEs are thought to be more greatly affected by insufficient training. In future studies, much more thorough and standardized reviewer training should be conducted, such as reiterating the concept of AEs and repeatedly conducting medical record review exercises.

Second, to refine the measuring tool, a clearer definition of each screening criterion will be needed. For example, for SC that may involve subjective evaluation, such as “No. 21. Abrupt medication stop”, clarifying operational definitions will help in increasing inter-rater reliability [20]. In this study, the GTT-type SC tended to show slightly higher reliability in the nurses’ reviews than the HMPS-type SC, which rely more heavily on the clinical experience of reviewers. The case review form should be improved by including more GTT-type SC while ensuring no reduction in its sensitivity. Furthermore, adding a question on AE occurrence to the nurses’ review to aid the decision-making of physicians should be considered to increase inter-rater reliability of physicians. Introducing a scoring system or short-answer questions to judge AE occurrence may also be considered, like the questions on causality and preventability of AEs [21].

This study has limitations. First, there is a limitation in evaluating inter-rater reliability of nurses on each screening criterion due to the sample size. Nineteen of the SC were not found even once in the process of reviewing the medical records of 96 individuals. It was difficult to determine whether this was due to SC characteristics or the small sample size. However, SC not commonly seen in other studies, such as intra-operative or post-operative death, may not have been observed even with a bigger sample size due to their characteristics [20]. Furthermore, it was difficult to evaluate the reliability and validity of decisions on specific details regarding AEs due to the sample size.

Second, as the medical record review was conducted in a single hospital, hospital characteristics may have had an effect. For example, this study largely lacked SC related to surgeries; therefore, inter-rater reliability for such SC was difficult to evaluate. Future studies should further evaluate inter-rater reliability of nurses for specific SC. Moreover, the quality of medical records in the hospital may have affected the medical record review, which may ultimately impact the inter-rater reliability.

In conclusion, although reliability was not good in certain cases, intermediate or higher levels were shown for inter-rater and intra-rater reliability in AE identification through medical record review. In the future, when conducting a large-scale medical record review for AE identification, measures such as reviewer training enhancement and further clarification of the definition of SC should be considered to increase inter-rater reliability in detecting AEs.

ACKNOWLEDGEMENTS

This study was supported by a grant from the National Evidence-based Healthcare Collaborating Agency (no. NECA-M-12-002).

Notes

Conflict of Interest

The authors have no conflicts of interest with the material presented in this paper.

Supplementary Material

Types of screening criteria (SC)